Set of Current Bodies

The following section introduces the vocabulary of the extractors currently implemented in the MICO platform. For every extractor, it covers the corresponding annotation/content part structure, its bodies, and the selection features it may use. For clarity, each annotation is shown on its own rather than as part of an extraction workflow. Selection information for a content part is provided only when the extraction process selects a subpart of its input.

For the implemented extractors, we introduced a dedicated ontology namespace, mmmterms (MICO Metadata Model Terms).

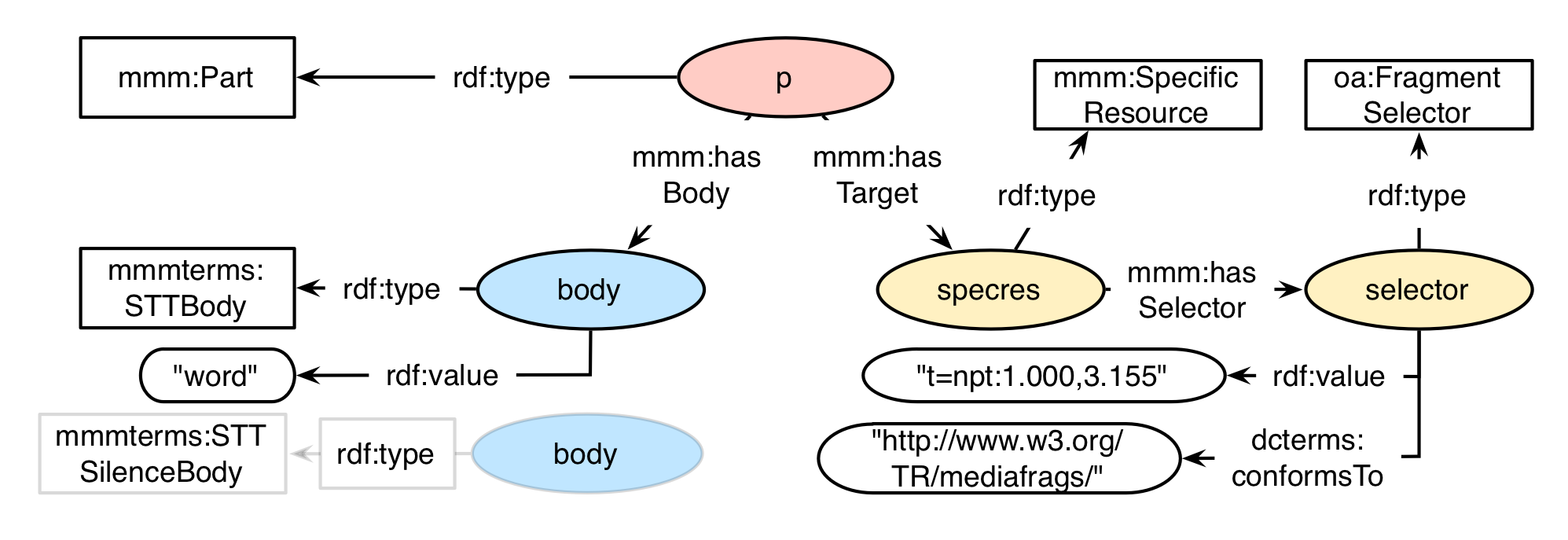

Speech To Text - STT

A speech-to-text analysis takes an audio file as input and identifies the fragments of the audio stream that contain words. The output is an XML file listing an entry for every word and for every silent segment.

Vocabulary

| Item | Type | Description |
|---|---|---|
| mmmterms:STTBody | Class | Subclass of mmm:Body. The body class for a speech to text body, containing a word. MUST have exactly one property rdf:value associated, containing the detected word. |
| mmmterms:STTSilenceBody | Class | Subclass of mmm:Body. The body class for a speech to text body indicating silence. |

The following example shows an annotation for a recognized word. The body contains the recognized word as a literal, and the target is refined by a temporal oa:FragmentSelector (Media Fragments syntax in normal play time) that selects a time range with millisecond precision, here from 1.000 s to 3.155 s.

Serialization

```turtle
<urn:p> a mmm:Part ;
    mmm:hasBody <urn:body> ;
    mmm:hasTarget <urn:specres> .

<urn:body> a mmmterms:STTBody ;
    rdf:value "word" .

<urn:specres> a mmm:SpecificResource ;
    mmm:hasSelector <urn:selector> .

<urn:selector> a oa:FragmentSelector ;
    rdf:value "t=npt:1.000,3.155" ;
    dcterms:conformsTo <http://www.w3.org/TR/media-frags/> .
```
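Consumers of speech-to-text results frequently need the selected time range as integer milliseconds. The following Python sketch converts a temporal fragment value of the form used in the STT example into a (start, end) pair in milliseconds. It is an illustration, not part of the MICO platform: the function name npt_range_to_ms is hypothetical, and only the simple "t=npt:<start>,<end>" form with times in seconds is handled (other normal play time notations, such as hh:mm:ss or open-ended ranges, are rejected).

```python
import re

# Simple "t=npt:<start>,<end>" fragments with times in seconds; the "npt:" prefix
# is optional because normal play time is the default time format.
_NPT_RANGE = re.compile(r"^t=(?:npt:)?(\d+(?:\.\d+)?),(\d+(?:\.\d+)?)$")


def npt_range_to_ms(value: str) -> tuple[int, int]:
    """Convert e.g. 't=npt:1.000,3.155' into (start_ms, end_ms). Hypothetical helper."""
    match = _NPT_RANGE.match(value)
    if match is None:
        raise ValueError(f"unsupported temporal fragment: {value!r}")
    start_s, end_s = (float(group) for group in match.groups())
    if end_s < start_s:
        raise ValueError("end time precedes start time")
    # round() guards against binary floating-point error in the multiplication.
    return round(start_s * 1000), round(end_s * 1000)


print(npt_range_to_ms("t=npt:1.000,3.155"))  # (1000, 3155)
```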

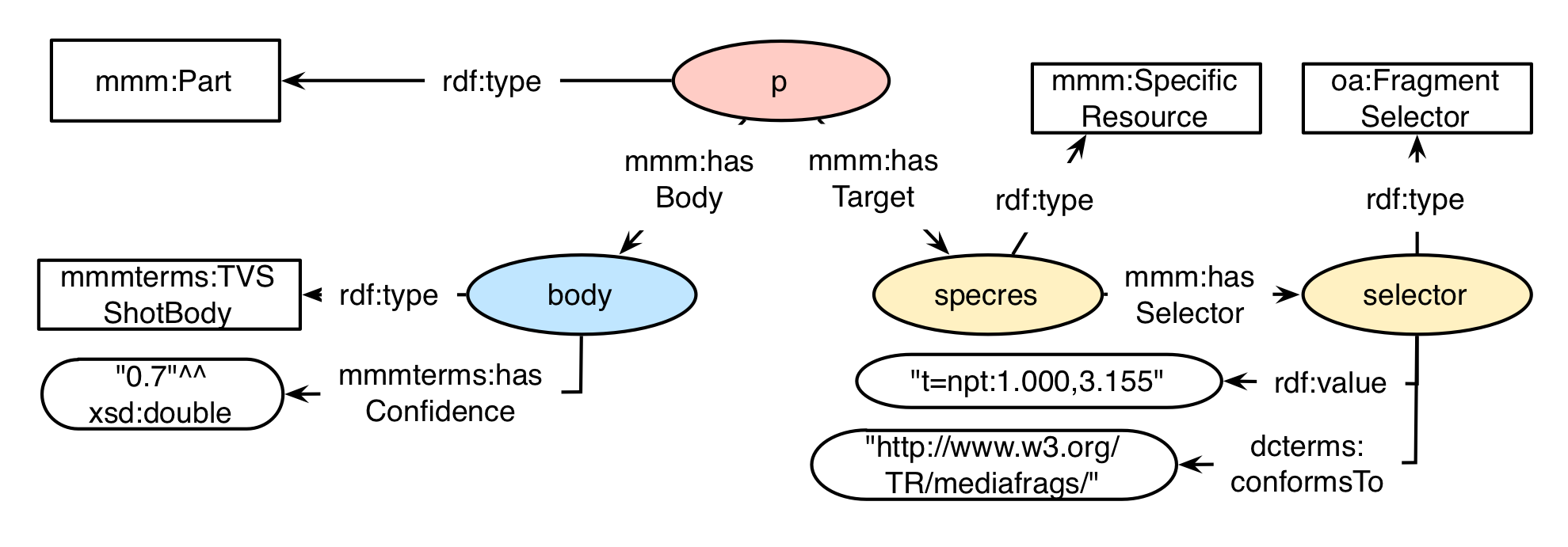

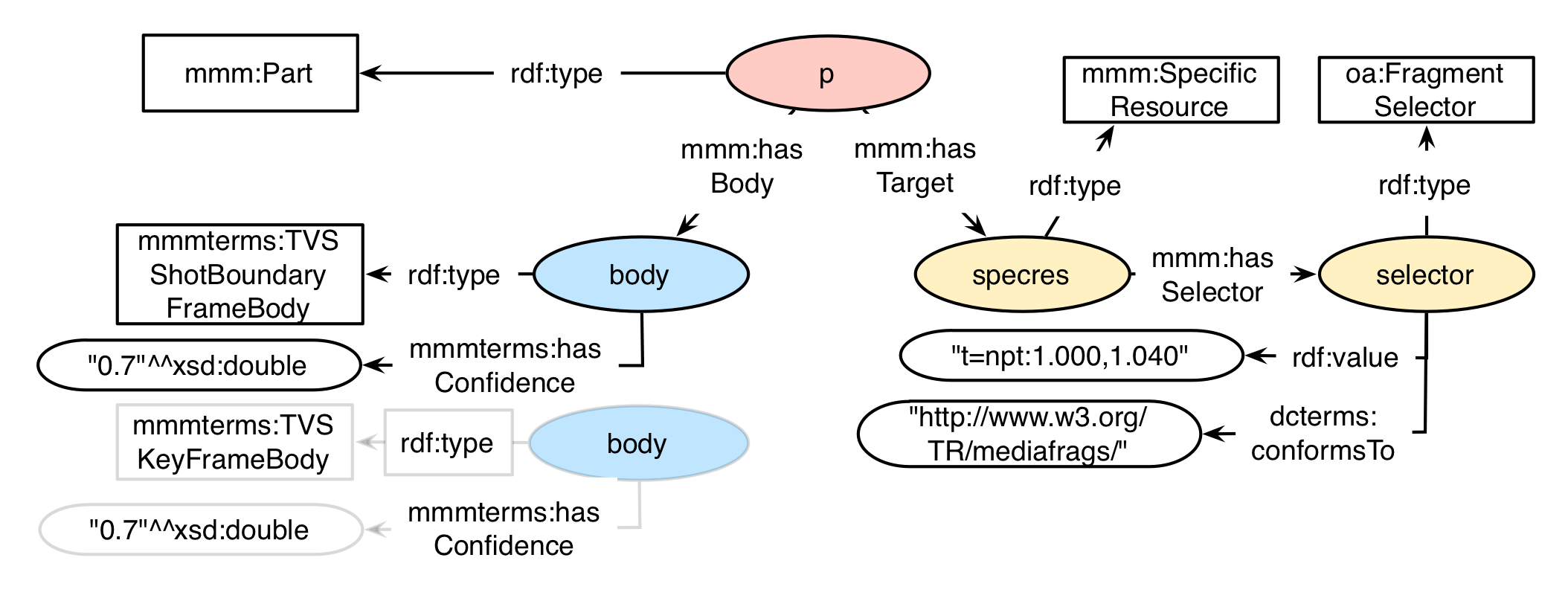

Temporal Video Segmentation - TVS

The Temporal Video Segmentation finds keyframes and shots in a video stream in order to split the whole video into smaller, meaningful pieces. For each shot, the boundary frame at the start of the shot is additionally persisted as a separate annotation.

Vocabulary

| Item | Type | Description |
|---|---|---|
| mmmterms:TVSBody | Class | Subclass of mmm:Body. Superclass for the temporal video segmentation bodies. MUST have a property mmmterms:hasConfidence associated, indicating the confidence of the recognized shot or keyframe. |
| mmmterms:TVSShotBody | Class | Subclass of mmmterms:TVSBody. The body class for a shot of a temporal video segmentation. |
| mmmterms:TVSKeyFrameBody | Class | Subclass of mmmterms:TVSBody. The body class for a keyframe of a temporal video segmentation. |
| mmmterms:TVSShotBoundaryFrameBody | Class | Subclass of mmmterms:TVSBody. The body class for the boundary frame at the start of a shot. |
| mmmterms:hasConfidence | Property | Property of various mmm:Body instances. Specifies how confident a party is in its result. The range is a literal typed as xsd:double. |

An example for a shot is shown below. A shot spans several frames, as indicated by its temporal fragment.

Serialization

```turtle
<urn:p> a mmm:Part ;
    mmm:hasBody <urn:body> ;
    mmm:hasTarget <urn:specres> .

<urn:body> a mmmterms:TVSShotBody ;
    mmmterms:hasConfidence "0.7"^^xsd:double .

<urn:specres> a mmm:SpecificResource ;
    mmm:hasSelector <urn:selector> .

<urn:selector> a oa:FragmentSelector ;
    rdf:value "t=npt:1.000,3.155" ;
    dcterms:conformsTo <http://www.w3.org/TR/media-frags/> .
```
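As a sketch of how an extractor might emit such a part, the Python function below builds the Turtle for a single TVS annotation. Several details are assumptions rather than MICO code: the function name tvs_part_turtle, the placeholder IRIs derived from iri_prefix, writing dcterms:conformsTo as the IRI of the W3C Media Fragments specification, and the omission of prefix declarations. The confidence is written as an xsd:double literal, matching the range given for mmmterms:hasConfidence in the vocabulary table.

```python
def tvs_part_turtle(body_class: str, start_s: float, end_s: float,
                    confidence: float, iri_prefix: str = "urn:") -> str:
    """Serialize one temporal video segmentation part as Turtle.

    Prefix declarations (mmm, mmmterms, oa, rdf, dcterms, xsd) are assumed to be
    emitted elsewhere; iri_prefix is a hypothetical way to derive placeholder IRIs.
    """
    if end_s < start_s:
        raise ValueError("end_s must not be smaller than start_s")

    def iri(local: str) -> str:
        return f"<{iri_prefix}{local}>"

    lines = [
        f"{iri('p')} a mmm:Part ;",
        f"    mmm:hasBody {iri('body')} ;",
        f"    mmm:hasTarget {iri('specres')} .",
        "",
        f"{iri('body')} a {body_class} ;",
        # Typed literal: the vocabulary gives xsd:double as the range of hasConfidence.
        f'    mmmterms:hasConfidence "{confidence}"^^xsd:double .',
        "",
        f"{iri('specres')} a mmm:SpecificResource ;",
        f"    mmm:hasSelector {iri('selector')} .",
        "",
        f"{iri('selector')} a oa:FragmentSelector ;",
        # Temporal media fragment in normal play time, millisecond precision.
        f'    rdf:value "t=npt:{start_s:.3f},{end_s:.3f}" ;',
        "    dcterms:conformsTo <http://www.w3.org/TR/media-frags/> .",
    ]
    return "\n".join(lines)


print(tvs_part_turtle("mmmterms:TVSShotBody", 1.0, 3.155, 0.7))
```

Passing mmmterms:TVSKeyFrameBody or mmmterms:TVSShotBoundaryFrameBody as body_class yields the other TVS body types, since they share the same part structure.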

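The following example shows a single-frame annotation, here a shot boundary frame (mmmterms:TVSShotBoundaryFrameBody); keyframes (mmmterms:TVSKeyFrameBody) likewise target only one frame. Since only one frame is selected, the temporal fragment covers just a short period that depends on the frame rate of the video: in the example, 40 ms, which corresponds to one frame at 25 fps.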

Serialization

```turtle
<urn:p> a mmm:Part ;
    mmm:hasBody <urn:body> ;
    mmm:hasTarget <urn:specres> .

<urn:body> a mmmterms:TVSShotBoundaryFrameBody ;
    mmmterms:hasConfidence "0.7"^^xsd:double .

<urn:specres> a mmm:SpecificResource ;
    mmm:hasSelector <urn:selector> .

<urn:selector> a oa:FragmentSelector ;
    rdf:value "t=npt:1.000,1.040" ;
    dcterms:conformsTo <http://www.w3.org/TR/media-frags/> .
```
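The length of a single-frame fragment follows directly from the frame rate: frame n of a video with a constant frame rate f covers the interval from n/f to (n+1)/f seconds. The Python sketch below derives the fragment value from a frame index under two assumptions that the text does not state: frames are numbered from 0, and the video has a constant frame rate. The name frame_fragment is hypothetical.

```python
def frame_fragment(frame_index: int, fps: float) -> str:
    """Temporal fragment covering exactly one frame (hypothetical helper).

    Assumes 0-based frame numbering and a constant frame rate.
    """
    if frame_index < 0 or fps <= 0:
        raise ValueError("frame_index must be >= 0 and fps must be > 0")
    start_s = frame_index / fps          # first instant of the frame
    end_s = (frame_index + 1) / fps      # first instant of the next frame
    return f"t=npt:{start_s:.3f},{end_s:.3f}"


print(frame_fragment(25, 25))  # t=npt:1.000,1.040
```

At 25 fps, frame 25 reproduces the fragment "t=npt:1.000,1.040" of the example above; at 30 fps the same second would yield a 33 ms fragment instead.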

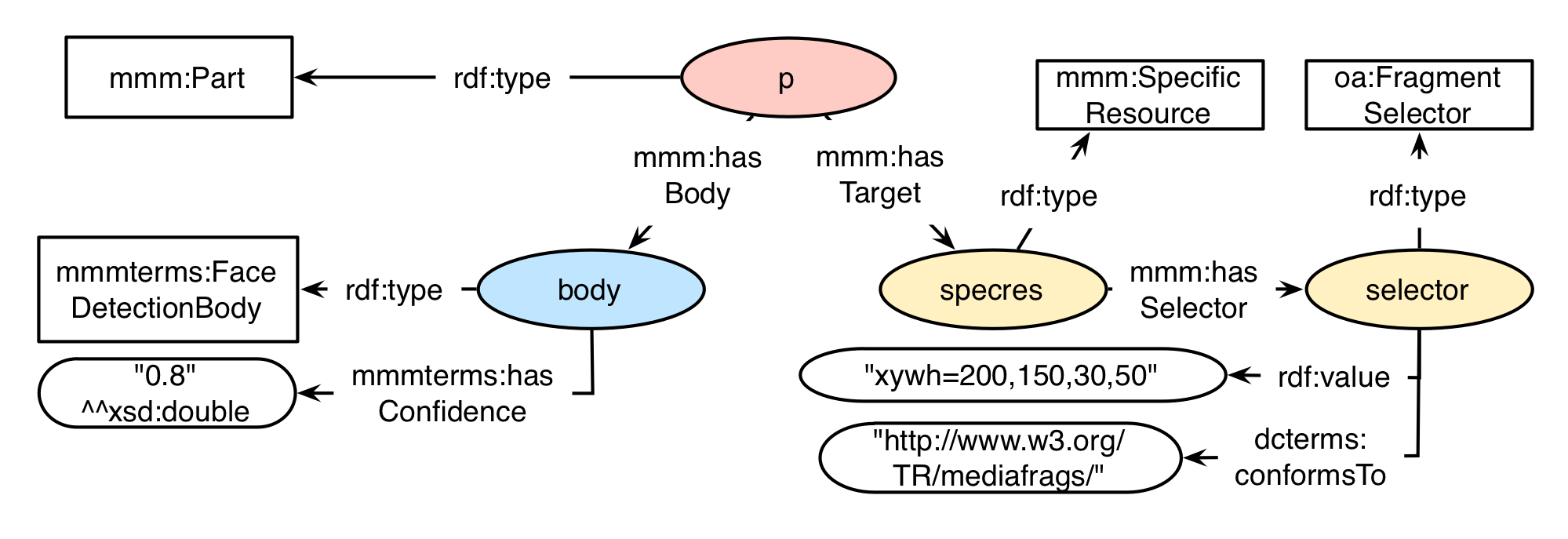

Face Detection - FD

Face detection extractors detect, but do not recognize, faces in pictures. The result consists of a spatial oa:FragmentSelector, which indicates the portion of the picture in which a face was detected, combined with a confidence value (the mmmterms:hasConfidence property at body level).

Vocabulary

| Item | Type | Description |
|---|---|---|
| mmmterms:FaceDetectionBody | Class | Subclass of mmm:Body. The body class for a face detection. MUST have a property mmmterms:hasConfidence associated, indicating the confidence of the extractor. |

The following example shows an mmm:Part created by a face detection extractor. Here, the face was detected in a rectangular area whose top-left corner is at pixel (200, 150), with a width of 30 pixels and a height of 50 pixels.

Serialization

```turtle
<urn:p> a mmm:Part ;
    mmm:hasBody <urn:body> ;
    mmm:hasTarget <urn:specres> .

<urn:body> a mmmterms:FaceDetectionBody ;
    mmmterms:hasConfidence "0.8"^^xsd:double .

<urn:specres> a mmm:SpecificResource ;
    mmm:hasSelector <urn:selector> .

<urn:selector> a oa:FragmentSelector ;
    rdf:value "xywh=200,150,30,50" ;
    dcterms:conformsTo <http://www.w3.org/TR/media-frags/> .
```
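A consumer that wants to draw or crop the detected region has to turn the spatial fragment into pixel coordinates. The following Python sketch parses the Media Fragments "xywh=" syntax (optionally prefixed with "pixel:", the default unit) and returns the rectangle together with its corner coordinates. Percent-based regions ("percent:") are deliberately rejected to keep the sketch short; the names PixelBox and parse_xywh are hypothetical.

```python
from typing import NamedTuple


class PixelBox(NamedTuple):
    """Rectangle selected by a spatial media fragment (hypothetical type)."""
    x: int       # left edge, in pixels
    y: int       # top edge, in pixels
    width: int
    height: int

    @property
    def corners(self) -> tuple[int, int, int, int]:
        """(left, top, right, bottom) = (x, y, x + width, y + height)."""
        return self.x, self.y, self.x + self.width, self.y + self.height


def parse_xywh(value: str) -> PixelBox:
    """Parse 'xywh=[pixel:]x,y,w,h'; percent-based regions are not supported."""
    if not value.startswith("xywh="):
        raise ValueError(f"not a spatial fragment: {value!r}")
    spec = value[len("xywh="):]
    if spec.startswith("pixel:"):
        spec = spec[len("pixel:"):]
    elif spec.startswith("percent:"):
        raise ValueError("percent-based regions are not handled in this sketch")
    fields = spec.split(",")
    if len(fields) != 4:
        raise ValueError(f"expected four comma-separated values in {value!r}")
    x, y, width, height = (int(field) for field in fields)
    if x < 0 or y < 0 or width <= 0 or height <= 0:
        raise ValueError(f"invalid region in {value!r}")
    return PixelBox(x, y, width, height)


print(parse_xywh("xywh=200,150,30,50").corners)  # (200, 150, 230, 200)
```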

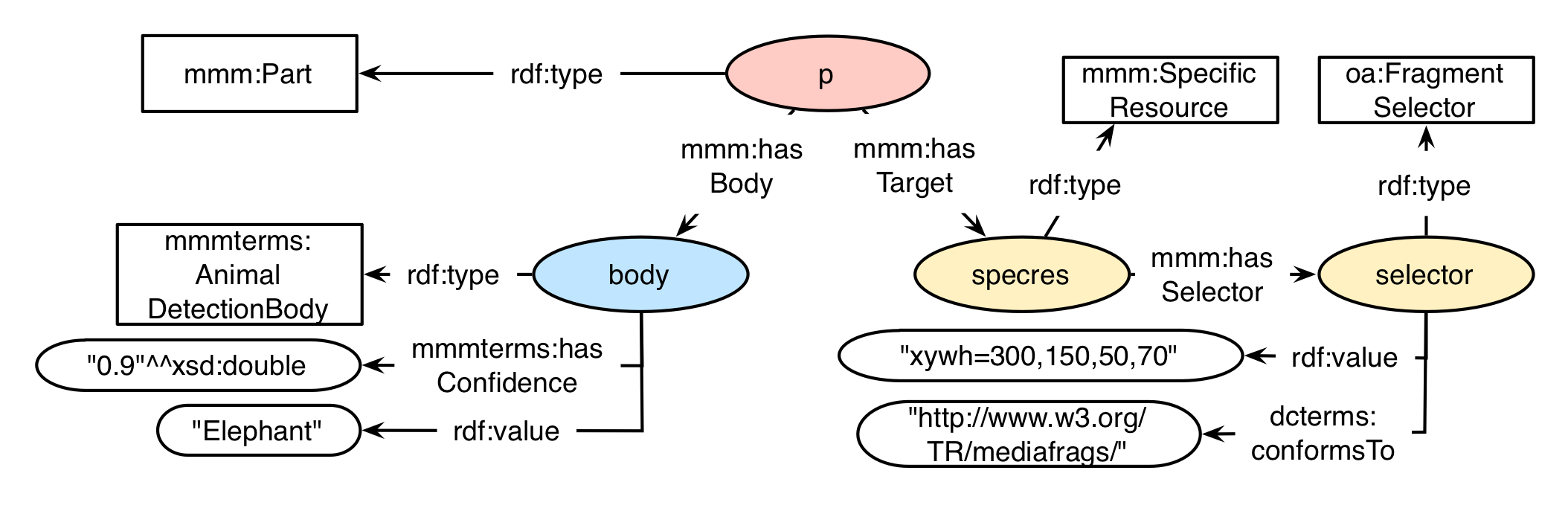

Animal Detection - AD

Animal detection extractors detect animals in pictures and, to some extent, also recognize them: the kind of animal is added via the rdf:value property. The confidence of the extractor is added via the mmmterms:hasConfidence property, and a spatial oa:FragmentSelector indicates the position of the animal in the picture.

Vocabulary

| Item | Type | Description |
|---|---|---|
| mmmterms:AnimalDetectionBody | Class | Subclass of mmm:Body. The body class for a detected animal. MUST have a property mmmterms:hasConfidence associated, indicating the confidence of the extractor. MUST have a property rdf:value for the type of animal associated. |

The following example illustrates an mmm:Part created by an animal detection extractor. An "Elephant" (property rdf:value) has been detected in the rectangle whose top-left corner is at pixel (300, 150), with a width of 50 pixels and a height of 70 pixels (indicated by the selector <urn:selector>). The extractor is 90% confident, as indicated by the mmmterms:hasConfidence property.

Serialization

```turtle
<urn:p> a mmm:Part ;
    mmm:hasBody <urn:body> ;
    mmm:hasTarget <urn:specres> .

<urn:body> a mmmterms:AnimalDetectionBody ;
    rdf:value "Elephant" ;
    mmmterms:hasConfidence "0.9"^^xsd:double .

<urn:specres> a mmm:SpecificResource ;
    mmm:hasSelector <urn:selector> .

<urn:selector> a oa:FragmentSelector ;
    rdf:value "xywh=300,150,50,70" ;
    dcterms:conformsTo <http://www.w3.org/TR/media-frags/> .
```
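The MUST constraints from the vocabulary tables of the mmmterms bodies can be checked mechanically before a part is stored. The sketch below encodes them as minimum/maximum property counts per body class and reports violations for a body given as a dictionary from property names to values. The dictionary representation and the names CONSTRAINTS and violations are hypothetical; reading "MUST have a property" as "at least one value" is an interpretation, while exactly one rdf:value for mmmterms:STTBody is stated in its table entry. A production implementation could express the same rules as SHACL shapes over the RDF graph instead.

```python
from typing import Optional

# (minimum, maximum) number of values per property; None means "no upper bound".
# Taken from the vocabulary tables; "MUST have a property" is read as "at least one".
CONSTRAINTS: dict[str, dict[str, tuple[int, Optional[int]]]] = {
    "mmmterms:STTBody": {"rdf:value": (1, 1)},
    "mmmterms:TVSShotBody": {"mmmterms:hasConfidence": (1, None)},
    "mmmterms:TVSKeyFrameBody": {"mmmterms:hasConfidence": (1, None)},
    "mmmterms:TVSShotBoundaryFrameBody": {"mmmterms:hasConfidence": (1, None)},
    "mmmterms:FaceDetectionBody": {"mmmterms:hasConfidence": (1, None)},
    "mmmterms:AnimalDetectionBody": {
        "mmmterms:hasConfidence": (1, None),
        "rdf:value": (1, None),
    },
}


def violations(body_class: str, properties: dict[str, list[str]]) -> list[str]:
    """Return human-readable constraint violations (empty list if the body is valid)."""
    problems = []
    for predicate, (minimum, maximum) in CONSTRAINTS.get(body_class, {}).items():
        count = len(properties.get(predicate, []))
        if count < minimum or (maximum is not None and count > maximum):
            if maximum is None:
                expected = f"at least {minimum}"
            elif minimum == maximum:
                expected = f"exactly {minimum}"
            else:
                expected = f"between {minimum} and {maximum}"
            problems.append(f"{body_class}: {predicate} occurs {count} time(s), expected {expected}")
    return problems


print(violations("mmmterms:AnimalDetectionBody", {"mmmterms:hasConfidence": ["0.9"]}))
# ['mmmterms:AnimalDetectionBody: rdf:value occurs 0 time(s), expected at least 1']
```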

Fusepool Annotations

The Fusepool Annotation Model (FAM) defines several annotations for textual content. It supports user-level annotations such as named entities, topics, and sentiment, as well as machine-level annotations such as tokens, POS tags, lemmas, and stems. For a detailed description of the model and its vocabulary, see the FAM specification. The following annotations are added to the MICO ontology:

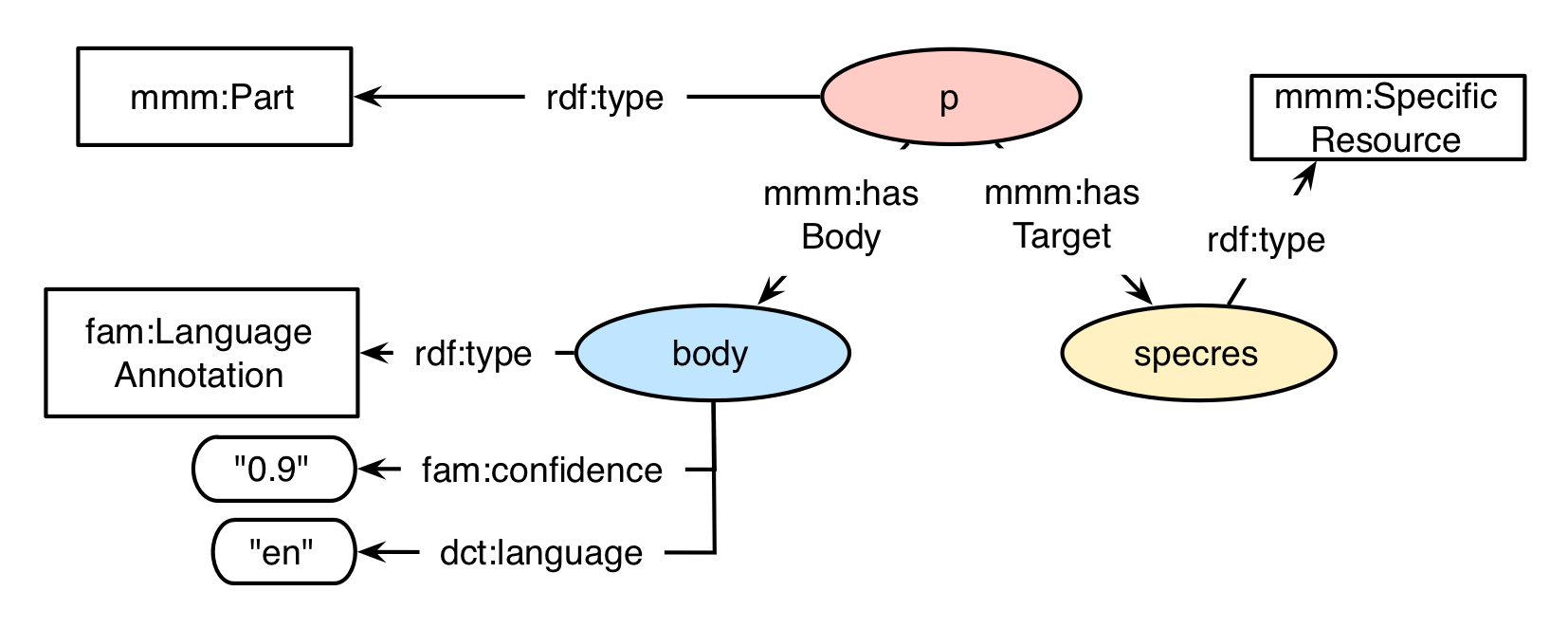

Language Annotation

A Language Annotation (fam:LanguageAnnotation) annotates the language of the parsed content, or of a part of it. In the example below, the whole content is targeted, so the specific resource carries no selector.

Serialization

```turtle
<urn:p> a mmm:Part ;
    mmm:hasBody <urn:body> ;
    mmm:hasTarget <urn:specres> .

<urn:body> a fam:LanguageAnnotation ;
    dcterms:language "en" ;
    fam:confidence "0.9" .

<urn:specres> a mmm:SpecificResource .
```

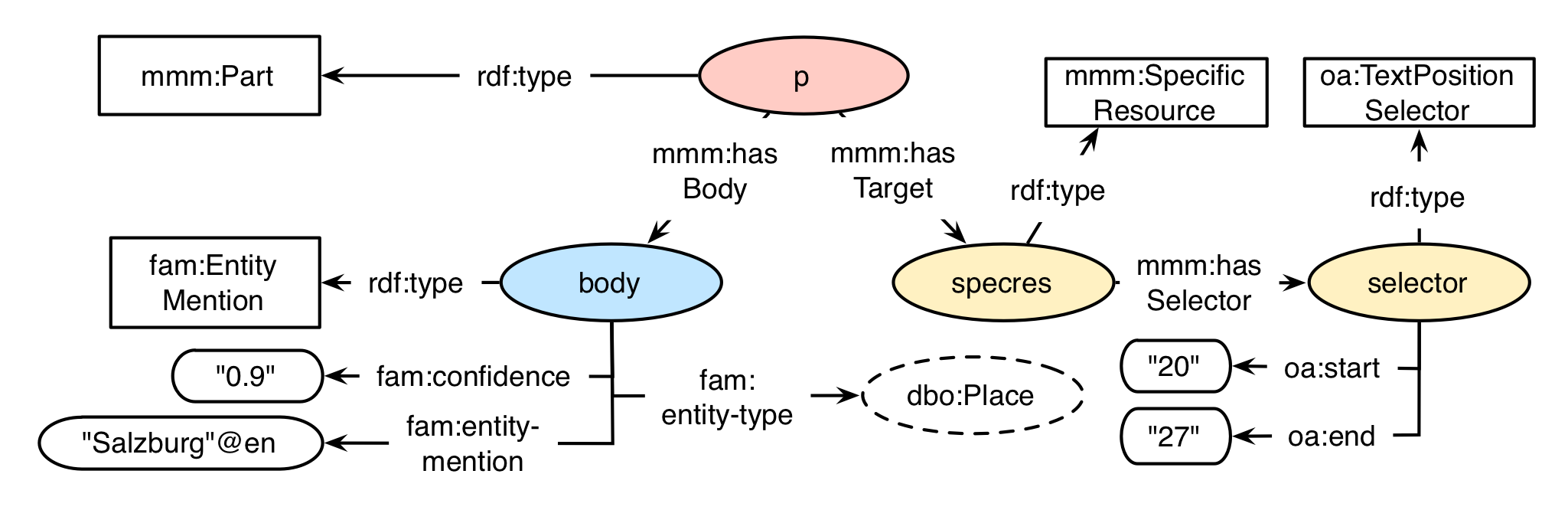

Entity Mention Annotation

An Entity Mention Annotation (fam:EntityMention) is typically used for the results of Named Entity Recognition (NER) components. It provides the mention and the type of the entity, and it expects a selector that marks the section of the text mentioning the entity. If an entity is mentioned multiple times in one text, the part for the annotation can contain multiple targets with their respective selectors.

Serialization

```turtle
<urn:p> a mmm:Part ;
    mmm:hasBody <urn:body> ;
    mmm:hasTarget <urn:specres> .

<urn:body> a fam:EntityMention ;
    fam:entity-type dbo:Place ;
    fam:entity-mention "Salzburg"@en ;
    fam:confidence "0.9" .

<urn:specres> a mmm:SpecificResource ;
    mmm:hasSelector <urn:selector> .

<urn:selector> a oa:TextPositionSelector ;
    oa:start "20" ;
    oa:end "28" .
```
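Because the part for an entity may carry one target per mention, an extractor has to compute a TextPositionSelector for every occurrence. The Python sketch below does this for exact string matches. It follows the Web Annotation convention that positions are 0-based character (Unicode code point) offsets, with the start included and the end excluded, so that end minus start equals the length of the mention. Plain substring matching and the name text_position_selectors are simplifying assumptions; a NER component would normally report its own offsets.

```python
def text_position_selectors(text: str, mention: str) -> list[tuple[int, int]]:
    """Return (start, end) offsets of every non-overlapping occurrence of mention.

    Offsets are 0-based code point positions; start is inclusive and end is
    exclusive, so text[start:end] == mention. Exact matching is a simplification.
    """
    if not mention:
        raise ValueError("mention must not be empty")
    selectors = []
    start = text.find(mention)
    while start != -1:
        end = start + len(mention)
        selectors.append((start, end))
        start = text.find(mention, end)  # continue searching after this occurrence
    return selectors


print(text_position_selectors("Salzburg and Salzburg", "Salzburg"))  # [(0, 8), (13, 21)]
```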

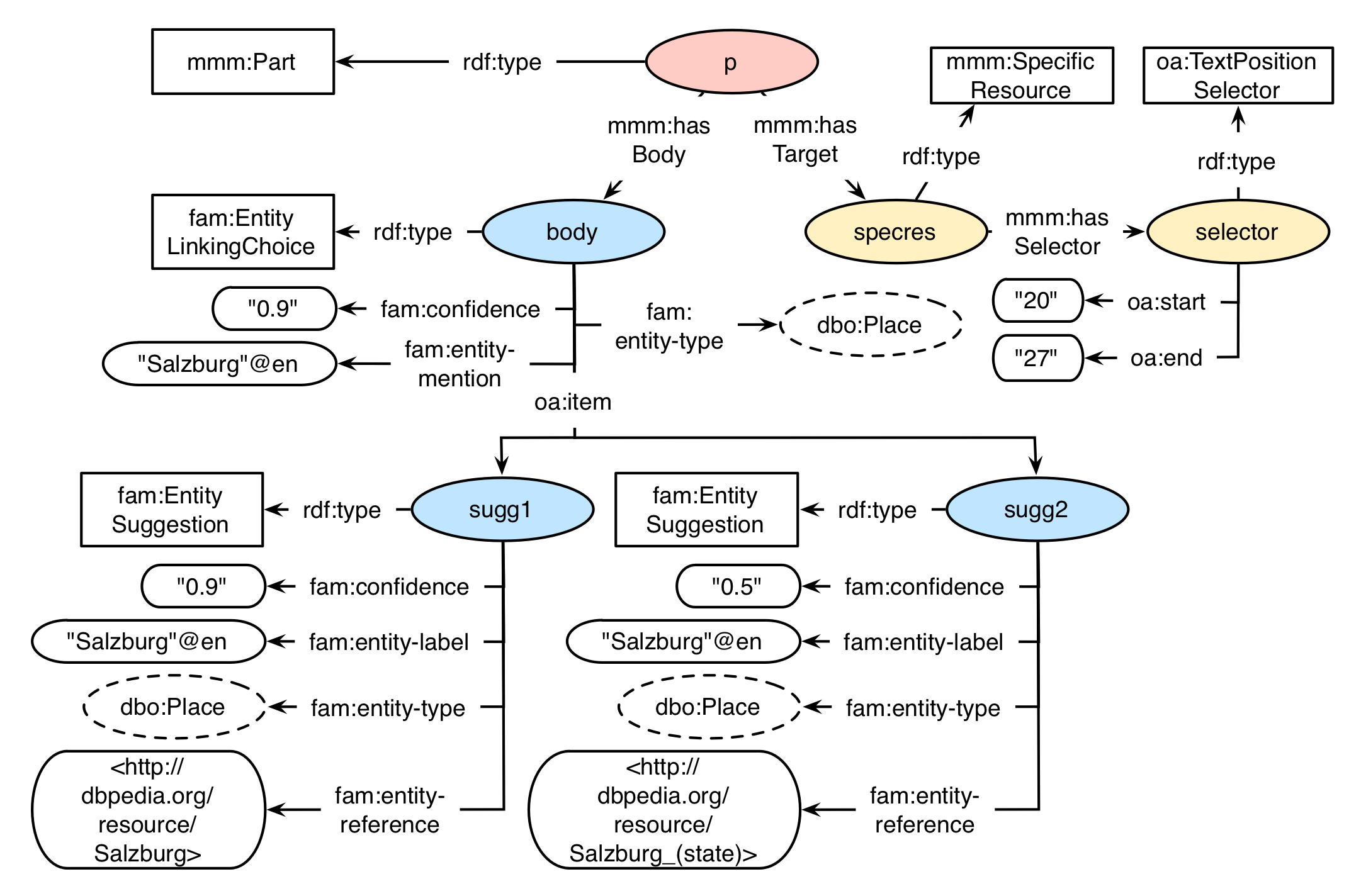

Linked Entity Annotation

If the NER extractor has multiple linking options and cannot disambiguate between them, it can use the fam:EntityLinkingChoice annotation. In this case, the extractor creates a fam:EntityLinkingChoice for the mention and one fam:EntitySuggestion for each linking option. The oa:item relationship links the choice instance to its suggestions.

Serialization

```turtle
<urn:p> a mmm:Part ;
    mmm:hasBody <urn:body> ;
    mmm:hasTarget <urn:specres> .

<urn:body> a fam:EntityLinkingChoice ;
    fam:entity-type dbo:Place ;
    fam:entity-mention "Salzburg"@en ;
    fam:confidence "0.9" ;
    oa:item <urn:sugg1>, <urn:sugg2> .

<urn:sugg1> a fam:EntitySuggestion ;
    fam:confidence "0.9" ;
    fam:entity-label "Salzburg"@en ;
    fam:entity-type dbo:Place ;
    fam:entity-reference <http://dbpedia.org/resource/Salzburg> .

<urn:sugg2> a fam:EntitySuggestion ;
    fam:confidence "0.5" ;
    fam:entity-label "Salzburg"@en ;
    fam:entity-type dbo:Place ;
    fam:entity-reference <http://dbpedia.org/resource/Salzburg_(state)> .

<urn:specres> a mmm:SpecificResource ;
    mmm:hasSelector <urn:selector> .

<urn:selector> a oa:TextPositionSelector ;
    oa:start "20" ;
    oa:end "28" .
```
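A consumer that needs a single link can resolve such a choice by picking one of its suggestions. The Python sketch below picks the suggestion with the highest fam:confidence, optionally ignoring suggestions below a threshold. This selection policy, the EntitySuggestion data class, and the min_confidence parameter are assumptions for illustration; the annotation model itself only records the alternatives.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class EntitySuggestion:
    """Hypothetical in-memory view of one fam:EntitySuggestion."""
    reference: str      # fam:entity-reference
    label: str          # fam:entity-label
    confidence: float   # fam:confidence


def best_suggestion(suggestions: Iterable[EntitySuggestion],
                    min_confidence: float = 0.0) -> Optional[EntitySuggestion]:
    """Pick the most confident suggestion, or None if none reaches min_confidence."""
    candidates = [s for s in suggestions if s.confidence >= min_confidence]
    if not candidates:
        return None
    # max() keeps the first of several equally confident suggestions.
    return max(candidates, key=lambda s: s.confidence)


suggestions = [
    EntitySuggestion("http://dbpedia.org/resource/Salzburg", "Salzburg", 0.9),
    EntitySuggestion("http://dbpedia.org/resource/Salzburg_(state)", "Salzburg", 0.5),
]
print(best_suggestion(suggestions).reference)  # http://dbpedia.org/resource/Salzburg
```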

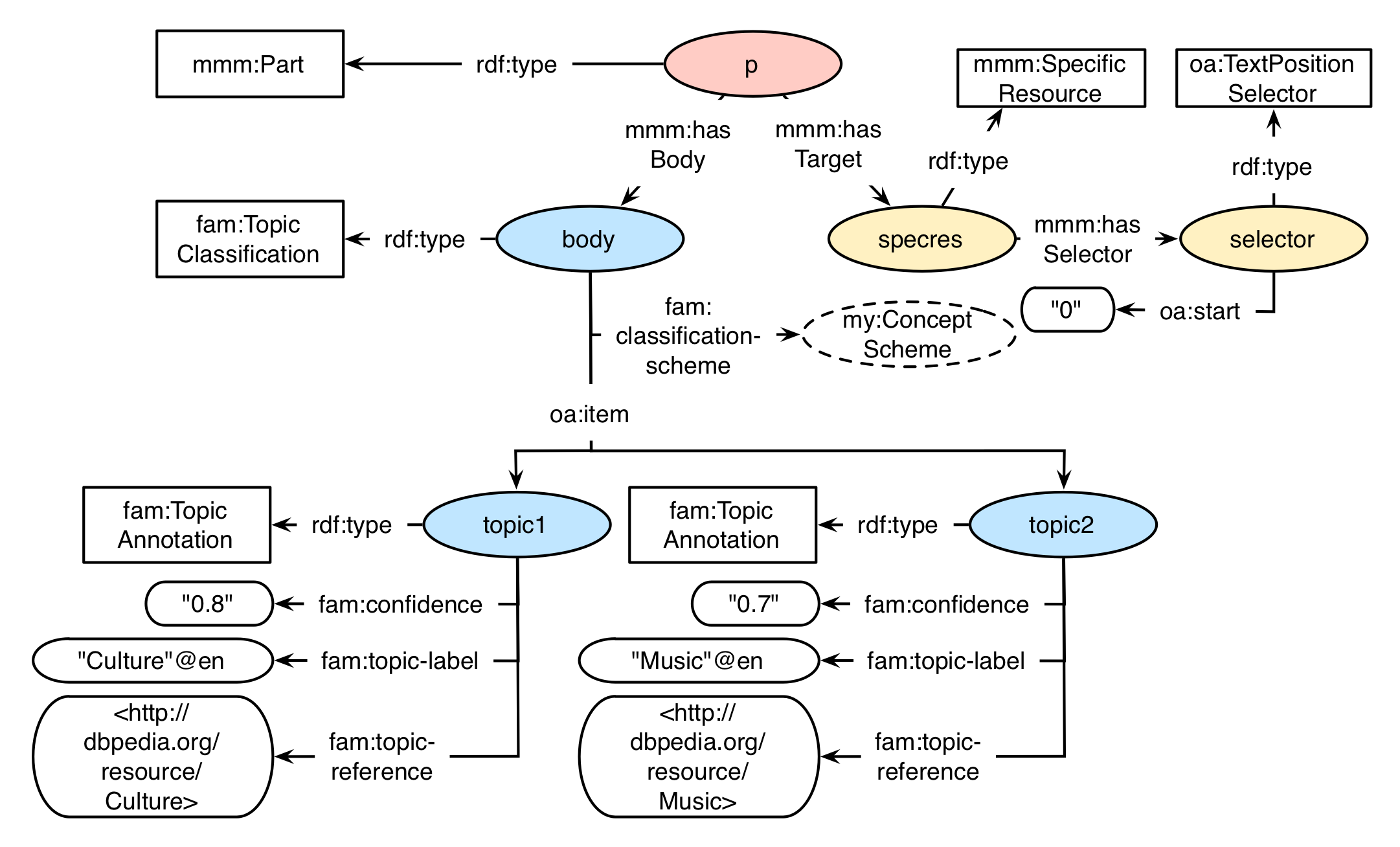

Topic Classification

A Topic Classification classifies the processed content according to a classification scheme. Such a scheme can be defined by a controlled vocabulary, so that each topic is identified by its IRI; however, plain string labels for topics are also supported. A topic classification consists of a single fam:TopicClassification with one or more linked fam:TopicAnnotation instances. This makes it possible to keep apart multiple classifications of the same content, potentially generated by different extractors. In most use cases, however, it is sufficient to look for the fam:TopicAnnotation instances of a given mmm:Item.

Serialization

```turtle
<urn:p> a mmm:Part ;
    mmm:hasBody <urn:body> ;
    mmm:hasTarget <urn:specres> .

<urn:body> a fam:TopicClassification ;
    fam:classification-scheme my:ConceptScheme ;
    oa:item <urn:topic1>, <urn:topic2> .

<urn:topic1> a fam:TopicAnnotation ;
    fam:confidence "0.8" ;
    fam:entity-label "Culture"@en ;
    fam:entity-reference <http://dbpedia.org/resource/Culture> .

<urn:topic2> a fam:TopicAnnotation ;
    fam:confidence "0.7" ;
    fam:entity-label "Music"@en ;
    fam:entity-reference <http://dbpedia.org/resource/Music> .

<urn:specres> a mmm:SpecificResource ;
    mmm:hasSelector <urn:selector> .

<urn:selector> a oa:TextPositionSelector ;
    oa:start "0" .
```
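Following the remark that it is usually enough to look for fam:TopicAnnotation instances, the Python sketch below collects topic labels and confidences from a graph held as plain (subject, predicate, object) string triples, using the values from the topic classification example. The triple representation, spelling the Turtle keyword a as "rdf:type", dropping the language tags from labels, and treating a missing fam:confidence as 0 are all assumptions; in practice an RDF library or a SPARQL query would typically be used.

```python
Triple = tuple[str, str, str]


def topic_annotations(triples: list[Triple]) -> list[tuple[str, float]]:
    """Return (label, confidence) for every fam:TopicAnnotation, most confident first."""
    topics = {s for s, p, o in triples if p == "rdf:type" and o == "fam:TopicAnnotation"}
    found = []
    for topic in topics:
        # Last value wins if a predicate occurs more than once (sketch simplification).
        props = {p: o for s, p, o in triples if s == topic}
        label = props.get("fam:entity-label", props.get("fam:entity-reference", topic))
        confidence = float(props.get("fam:confidence", "0"))  # assumption: missing -> 0
        found.append((label, confidence))
    # Highest confidence first; ties broken by label for a deterministic order.
    return sorted(found, key=lambda item: (-item[1], item[0]))


triples = [
    ("urn:body", "rdf:type", "fam:TopicClassification"),
    ("urn:body", "oa:item", "urn:topic1"),
    ("urn:body", "oa:item", "urn:topic2"),
    ("urn:topic1", "rdf:type", "fam:TopicAnnotation"),
    ("urn:topic1", "fam:confidence", "0.8"),
    ("urn:topic1", "fam:entity-label", "Culture"),
    ("urn:topic2", "rdf:type", "fam:TopicAnnotation"),
    ("urn:topic2", "fam:confidence", "0.7"),
    ("urn:topic2", "fam:entity-label", "Music"),
]
print(topic_annotations(triples))  # [('Culture', 0.8), ('Music', 0.7)]
```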

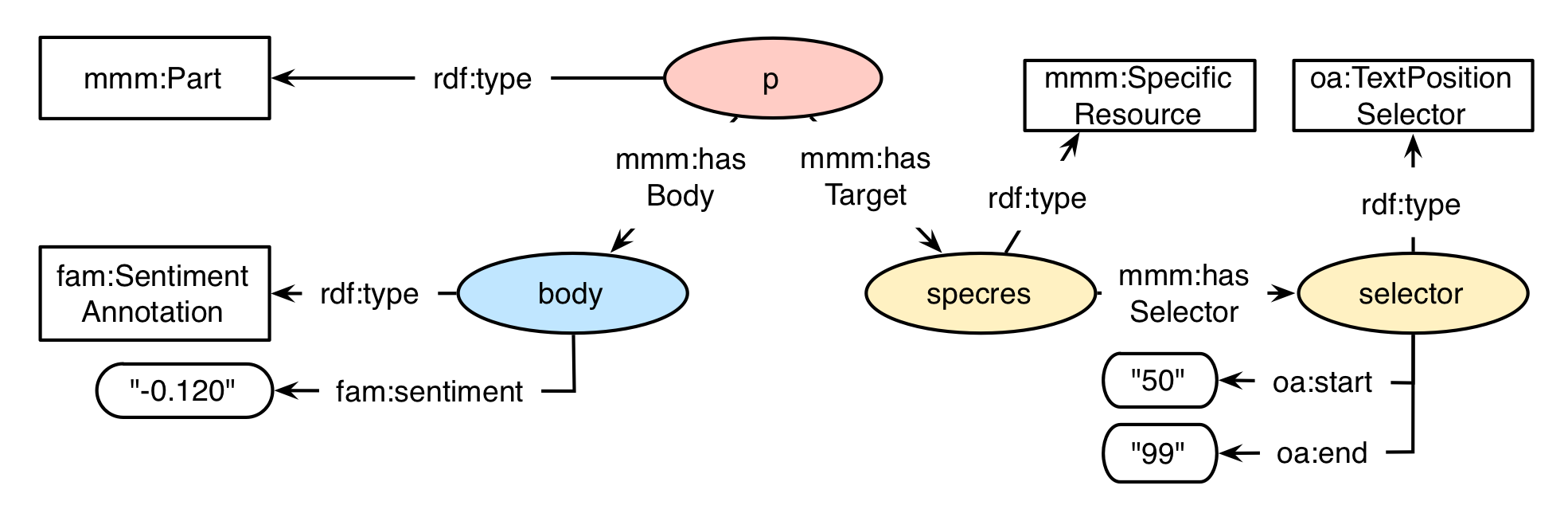

Sentiment Annotation

A Sentiment Annotation (fam:SentimentAnnotation) describes the sentiment of the document or of a selection of the document. The fam:sentiment value is a number in the range [-1, +1], where 0 stands for neutral and higher values indicate a more positive sentiment.

Serialization

```turtle
<urn:p> a mmm:Part ;
    mmm:hasBody <urn:body> ;
    mmm:hasTarget <urn:specres> .

<urn:body> a fam:SentimentAnnotation ;
    fam:sentiment "-0.120" .

<urn:specres> a mmm:SpecificResource ;
    mmm:hasSelector <urn:selector> .

<urn:selector> a oa:TextPositionSelector ;
    oa:start "50" ;
    oa:end "99" .
```
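Applications often reduce the numeric fam:sentiment value to a coarse label. The Python sketch below checks the documented range [-1, +1] and maps 0 to neutral, positive values to positive, and negative values to negative. The optional neutral_band parameter, which widens the neutral zone around 0, and the function name sentiment_label are hypothetical additions, not part of the FAM vocabulary.

```python
def sentiment_label(value: float, neutral_band: float = 0.0) -> str:
    """Map a fam:sentiment value in [-1, +1] to a coarse label.

    neutral_band is a hypothetical tolerance: values with |value| <= neutral_band
    count as neutral. With the default of 0.0, only exactly 0 is neutral.
    """
    if not -1.0 <= value <= 1.0:   # also rejects NaN
        raise ValueError(f"sentiment {value} outside [-1, +1]")
    if abs(value) <= neutral_band:
        return "neutral"
    return "positive" if value > 0 else "negative"


print(sentiment_label(float("-0.120")))                    # negative
print(sentiment_label(float("-0.120"), neutral_band=0.2))  # neutral
```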